Most AI projects die deep in the org chart.

I just came out of the AI Innovators panel at INSEAD’s AI Forum Americas in San Francisco. The message from the stage was clear: The barrier to AI adoption isn’t technology. It’s management.

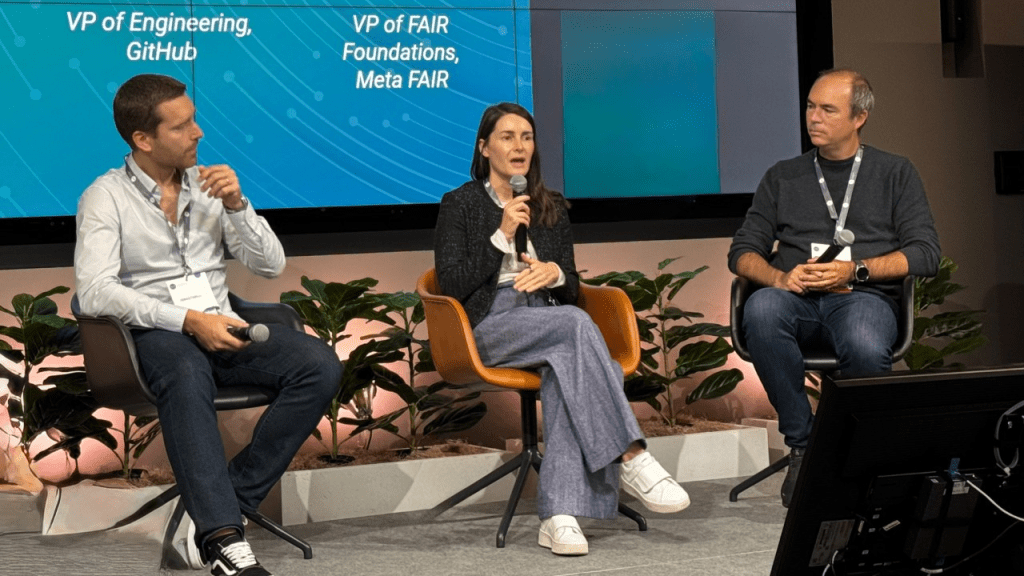

On stage were:

• Gemma Garriga (VP Engineering, GitHub)

• Sebastian Bak (Global Co-Lead for AI, BCG X)

• Stephane Kasriel (VP FAIR Foundations, Meta FAIR)

All three said it in their own way: the models are ready. The math is cheap. The problem is us.

1. Most budgets are backwards

Sebastian didn’t mince words: “Budget 30% for development and 70% for change management.”

That’s the opposite of how most executives spend today. We still treat AI as an IT project when it’s actually an organizational transformation. Training, incentives, workflow redesign: this is where adoption lives or dies. Ignore it, and your AI pilot ends up as shelfware, one more slide deck nobody opens.

2. CFOs don’t care about your demo

The finance test is simple: show gains in the group that actually adopted your solution. Not promises. Not a POC. Cash in the bank.

If you can’t prove impact at the cohort level, you don’t have a business case — you have theater.
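To make "cohort level" concrete, here is a minimal sketch of the comparison a finance team might ask for. It was not shown on the panel. The record fields (`adopted`, `value`) and the sample numbers are hypothetical, and a plain difference in means like this shows correlation only, so treat it as a starting point for the conversation rather than proof of causation.

```python
from statistics import mean


def cohort_uplift(records):
    """Compare a business metric between adopters and non-adopters.

    `records` is a list of dicts with two hypothetical fields:
      - "adopted": True if the team actually uses the AI solution
      - "value":   the metric finance cares about (e.g. revenue per rep)
    Returns (adopter_mean, non_adopter_mean, relative_uplift).
    """
    adopters = [r["value"] for r in records if r["adopted"]]
    others = [r["value"] for r in records if not r["adopted"]]
    if not adopters or not others:
        raise ValueError("need at least one record in each cohort")

    adopter_mean = mean(adopters)
    other_mean = mean(others)
    if other_mean == 0:
        raise ValueError("non-adopter mean is zero; relative uplift undefined")

    return adopter_mean, other_mean, (adopter_mean - other_mean) / other_mean


# Made-up illustration data, not results from any real rollout.
sample = [
    {"adopted": True, "value": 120},
    {"adopted": True, "value": 130},
    {"adopted": False, "value": 100},
    {"adopted": False, "value": 100},
]

adopter_mean, other_mean, uplift = cohort_uplift(sample)
print(f"adopters={adopter_mean}, others={other_mean}, uplift={uplift:.0%}")
```

If the uplift only shows up when you average across everyone, including teams that never touched the tool, the business case is not there yet.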

3. Move fast and DON’T break things

Gemma’s reminder: building fast is easy, integrating well is hard. AI projects crash when they move from prototype to production. The fix is discipline: break work into small tasks, measure what the AI touched, and track how long it takes code to move from pull request to production. Ship small. Prove safe. Scale.
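The tracking part can start very small. The sketch below computes the median time from pull request to production, split by whether the change was AI-assisted. It is an illustration under assumptions, not a tool mentioned on stage: the field names (`opened_at`, `deployed_at`, `ai_assisted`) and the sample timestamps are hypothetical, and in practice you would pull them from your own source control and deploy logs.

```python
from datetime import datetime
from statistics import median


def lead_time_hours(opened_at, deployed_at):
    """Hours between a pull request opening and its production deploy.

    Both arguments are ISO 8601 timestamp strings.
    """
    opened = datetime.fromisoformat(opened_at)
    deployed = datetime.fromisoformat(deployed_at)
    return (deployed - opened).total_seconds() / 3600


def median_lead_time_by_cohort(changes):
    """Median PR-to-production lead time, keyed by the ai_assisted flag."""
    buckets = {}
    for change in changes:
        hours = lead_time_hours(change["opened_at"], change["deployed_at"])
        buckets.setdefault(change["ai_assisted"], []).append(hours)
    return {flag: median(values) for flag, values in buckets.items()}


# Made-up illustration data with hypothetical field names.
changes = [
    {"ai_assisted": True, "opened_at": "2024-01-01T09:00:00", "deployed_at": "2024-01-01T15:00:00"},
    {"ai_assisted": True, "opened_at": "2024-01-02T09:00:00", "deployed_at": "2024-01-02T21:00:00"},
    {"ai_assisted": False, "opened_at": "2024-01-02T09:00:00", "deployed_at": "2024-01-03T09:00:00"},
    {"ai_assisted": False, "opened_at": "2024-01-04T09:00:00", "deployed_at": "2024-01-06T09:00:00"},
]

print(median_lead_time_by_cohort(changes))
```

The median is used here instead of the mean so that one stuck pull request does not dominate the number.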

4. Quick wins and moonshots must coexist

Stephane compared AI to pharma: many bets, many failures, huge costs. The CFO wants a 90-day deliverable that proves value. The board wants a moonshot that reimagines the company in an AI-first world. You need both. Quick wins earn credibility. Moonshots earn the future.

5. Managers need a new job description

Hierarchies slow everything down. In an age of agentic AI, the manager role shifts. Less traffic cop, more architect. Their job: set guardrails, define success metrics, and remove blockers. Not “what did you do this week” but “what did the system learn and ship.”

6. Costs are collapsing, but value is elsewhere

The cost of running last year’s top model has already dropped by orders of magnitude, so running models is not where the margin is. Value accrues at the solution layer, to the companies solving painful, specific problems that users will pay to have solved today. Infrastructure will be cheap. Adoption will not.

Takeaway

Most AI projects don’t fail in the lab. They fail in the org chart.

If you want to win with AI:

• Pick one workflow that matters.

• Prove adoption and cash impact in 90 days.

• Fund change management like you mean it.

• Run one moonshot in parallel.

• Redefine management around learning, not reporting.

The model race makes headlines. The culture race decides who survives.

About the Author:

Daniel Perry is a Silicon Valley-based start-up founder — and advisor to investors, boards & CEOs — connecting sustainability, technology & impact.